Semantics Chronicles

Semantic Learning and Vocabulary Structure

One morning, I was doing some research on some of the terms we throw around in the semantic world, in particular “subsumption”. This led me to a web site with lots of information about learning theories[1]. Learning is a complex process to which many dedicate their entire academic and professional lives, so this paper will not even attempt to pose theories. What this paper does do is look at the Ausubel perspective on cognitive subsumption and compare it with vocabulary development.

According to Ausubel’s Subsumption Theory:

To subsume is to incorporate new material into one's cognitive structures. … When information is subsumed into the learner's cognitive structure it is organized hierarchically. New material can be subsumed in two different ways, and for both of these, no meaningful learning takes place unless a stable cognitive structure exists.

This seems to imply that to learn about a vocabulary term, one must have a stable, hierarchical cognitive structure. For shared learning, then, the structure must be a shared structure. This sets out a goal for an enterprise vocabulary:

GOAL: a vocabulary that provides stable cognitive structure for enterprise learning about information requirements.

In order for the structure to remain stable, we must understand how new terms will be added to it. Ausubel proposed four processes of meaningful learning[2]:

Derivative subsumption: this describes the situation in which the new information I learn is an instance or example of a concept that I have already learned.

· So, let's suppose I have acquired a basic concept such as "tree". I know that a tree has a trunk, branches, green leaves, and may have some kind of fruit, and that, when fully grown is likely to be at least 12 feet tall.

· Now I learn about a kind of tree that I have never seen before, let's say a persimmon tree that conforms to my previous understanding of tree.

· My new knowledge of persimmon trees is attached to my concept of tree, without substantially altering that concept in any way.

So, an Ausubelian would say that I had learned about persimmon trees through the process of derivative subsumption.

In a vocabulary example, let’s suppose:

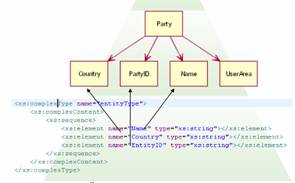

• Our vocabulary already has a fully defined component for “Party”.

• We are trying to learn about a new schema with a complex type called “entity”

To handle this case, we simply:

- Convert the entity type to a vocabulary component

- Model “entity” fields against the “Party” semantic content model

We have taught the vocabulary—and all of its users—about entity from a new dialect.
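The derivative case can be sketched in a few lines of Python. This is only an illustration of the idea, not the Contivo Vocabulary Modeler's data model or API: the class names, the `model_against` helper, and the field and property names (`entityName`, `name`, `address`, and so on) are all assumptions made for the example. The point it shows is that every “entity” field maps to a property “Party” already has, so “Party” itself does not change.

```python
from dataclasses import dataclass, field


@dataclass
class SemanticComponent:
    """A shared concept in the vocabulary, such as "Party" (hypothetical model)."""
    name: str
    properties: set[str] = field(default_factory=set)


@dataclass
class SchemaComponent:
    """A schema type after conversion to a vocabulary component."""
    name: str
    mappings: dict[str, str] = field(default_factory=dict)  # schema field -> semantic property


def model_against(name, schema_fields, concept):
    """Map each schema field to an existing property of the concept.

    Raises ValueError if a field needs a property the concept lacks,
    because the case would then no longer be derivative.
    """
    missing = sorted(set(schema_fields.values()) - concept.properties)
    if missing:
        raise ValueError(f"{concept.name} has no property for {missing}")
    return SchemaComponent(name, dict(schema_fields))


# Hypothetical "Party" concept and "entity" fields, invented for this sketch.
party = SemanticComponent("Party", {"name", "address", "identifier"})
snapshot = set(party.properties)

entity = model_against("entity", {"entityName": "name", "entityAddr": "address"}, party)
print(entity.mappings)
print(party.properties == snapshot)  # the concept itself did not change
```

If a field cannot be mapped, the helper refuses rather than silently altering “Party”; that situation is the correlative case.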

Correlative subsumption: this describes the situation in which the new information I learn extends a concept that I have already learned.

· Now, let's suppose I encounter a new kind of tree that has red leaves, rather than green.

· In order to accommodate this new information, I have to alter or extend my concept of tree to include the possibility of red leaves.

I have learned about this new kind of tree through the process of correlative subsumption. In a sense, you might say that this is more "valuable" learning than that of derivative subsumption, since it enriches the higher-level concept.

In a vocabulary example, let’s suppose:

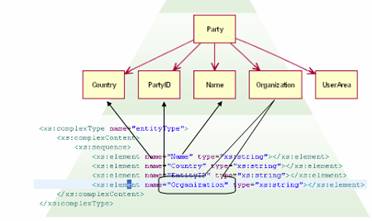

• Our vocabulary already has a fully defined component for “Party”.

• From a new schema we find that “entity” is similar to “Party” except that it has an additional property: a description of the organization the party belongs to.

To handle this case, we simply:

- Convert the entity type to a vocabulary component

- Model “entity” fields against the “Party” semantic content model

- Extend “Party” to include an organization property.
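As a rough sketch of these three steps, assume a vocabulary concept is nothing more than a name and a set of properties. The `SemanticComponent` class, the `subsume_correlative` helper, and the field names are hypothetical and exist only for illustration. The difference from the derivative case is that the higher-level concept is enriched by the new schema.

```python
from dataclasses import dataclass, field


@dataclass
class SemanticComponent:
    """A shared concept in the vocabulary (hypothetical model)."""
    name: str
    properties: set[str] = field(default_factory=set)


def subsume_correlative(concept, schema_fields):
    """Map schema fields to the concept, extending it where needed.

    Returns the field mappings and the sorted list of properties added.
    """
    added = sorted(set(schema_fields.values()) - concept.properties)
    concept.properties.update(added)
    return dict(schema_fields), added


# Hypothetical "Party" concept and "entity" fields, invented for this sketch.
party = SemanticComponent("Party", {"name", "address"})
entity_fields = {
    "entityName": "name",
    "entityAddr": "address",
    "orgDesc": "organization",  # the additional property found in the new schema
}

mappings, added = subsume_correlative(party, entity_fields)
print(added)
print(sorted(party.properties))
```

Returning the list of added properties makes the change to “Party” explicit, which matters when the structure is shared and other users need to know that the concept has grown.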

Superordinate learning: this describes the situation in which the new information I learn is a concept that relates known examples of a concept.

– Imagine that I was well acquainted with maples, oaks, apple trees, etc., but I did not know, until I was taught, that these were all examples of deciduous trees.

– In this case, I already knew a lot of examples of the concept, but I did not know the concept itself until it was taught to me. This is superordinate learning.

In our example vocabulary:

•

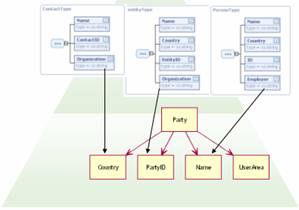

There is no “Party” component

There is no “Party” component

• We have 3 schemas that have complex types that describe a person or entity

To handle this case, we:

- Convert schemas to components

- Create Party Semantic Component and Term

- Model all three new components against the new “Party” Semantic Component

Here, we have used the vocabulary to establish relationships between three different representations of the same concept.
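A minimal sketch of the superordinate case follows. The three schema types (`customer`, `vendor`, `contact`), their fields, and the `create_superordinate` helper are invented for illustration, and building the new concept as the union of the properties the examples use is only one possible design. It shows a “Party” concept being created from known examples, after which each example can be modeled against it.

```python
from dataclasses import dataclass


@dataclass
class SemanticComponent:
    """A shared concept in the vocabulary (hypothetical model)."""
    name: str
    properties: set[str]


# Three converted schema components: schema field -> meaning assigned by the modeler.
components = {
    "customer": {"custName": "name", "custAddr": "address"},
    "vendor": {"vendorName": "name", "location": "address", "taxId": "identifier"},
    "contact": {"fullName": "name", "addr": "address"},
}


def create_superordinate(name, components):
    """Create a new concept covering every property the known examples use."""
    properties = set()
    for mappings in components.values():
        properties.update(mappings.values())
    return SemanticComponent(name, properties)


party = create_superordinate("Party", components)
print(sorted(party.properties))

# Each of the three components can now be modeled against the new concept.
for schema_name, mappings in components.items():
    print(schema_name, set(mappings.values()) <= party.properties)
```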

Combinatorial learning: the first three learning processes all involve new information that "attaches" to a hierarchy at a level that is either below or above previously acquired knowledge.

Combinatorial learning is different; it describes a process by which the new idea is derived from another idea that is neither higher nor lower in the hierarchy, but at the same level (in a different, but related, "branch"). You could think of this as learning by analogy.

• For example, to teach someone about pollination in plants, you might relate it to previously acquired knowledge of how fish eggs are fertilized.

In our vocabulary example:

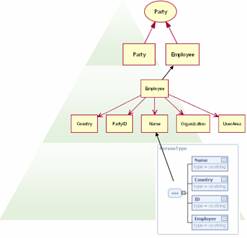

- We have a full component for “Party”

- We have a new schema type that describes a person

To handle this case, we:

- Convert schema to components

- Compare new component to “Party”

- Decide the “employer” field makes this a different type of Party than the general case

- Create a new semantic component for employee

- Assign Party Term

- Map semantic components

Here, by using the same term we have used the layers of abstraction to identify the fact that there are similarities between “Party” and “Employee” but they must have different data representations.
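The combinatorial case might be sketched as follows. Everything here is an assumption for illustration: the `SemanticComponent` class with a separate `term` attribute, the `is_sibling_case` helper, and the field names. In practice, deciding that “employer” distinguishes the new type is a modeler's judgment; the helper only stands in for that decision. The sketch shows a sibling component being created instead of extending “Party”, with the shared term recording the analogy between the two.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SemanticComponent:
    """A semantic component assigned to a vocabulary term (hypothetical model)."""
    name: str
    term: str  # the shared vocabulary term this component is assigned to
    properties: frozenset[str]


def is_sibling_case(concept, schema_fields, distinguishing):
    """True if the schema carries a distinguishing property the concept lacks."""
    new_properties = set(schema_fields.values()) - concept.properties
    return bool(new_properties & distinguishing)


# Hypothetical "Party" component and person schema fields, invented for this sketch.
party = SemanticComponent("Party", "Party", frozenset({"name", "address"}))
person_fields = {"fullName": "name", "homeAddr": "address", "employer": "employer"}

if is_sibling_case(party, person_fields, {"employer"}):
    # Create a separate component rather than extending Party, and give it
    # the same term so the two remain related.
    employee = SemanticComponent("Employee", party.term, frozenset(person_fields.values()))
else:
    employee = None

same_term = [c.name for c in (party, employee) if c is not None and c.term == "Party"]
print(same_term)
```

Because “Party” is left untouched, existing mappings to it stay valid, while the shared term still lets users find “Employee” when they look up “Party”.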

Conclusion

Learning, especially group learning, is dependent on a stable structure and controlled processes for adding newly learned concepts to that structure. A vocabulary structure that does not account for all four learning styles will become brittle over time as new concepts are disorganized or forgotten.

The vocabulary structure used in the Contivo Vocabulary Modeler can support all four learning styles in a structure that can be easily shared and managed for group learning.